Obligatory shilling for unar, I love that little fucker so much

- Single command to handle uncompressing nearly all formats.

- No obscure flags to remember, just `unar <yourfile>`

- Makes sure output is always contained in a directory

- Correctly handles weird Japanese zip files with SHIFT-JIS filename encoding, even when standard `unzip` doesn’t

I’m an `atool` kinda person

gonna start lovingly referring to good software tools as “little fuckers”

Happy cake day!

cheers!

What weird Japanese zip files are you handling?

Voicebanks for Utau (a free (as in beer, iirc) clone of Vocaloid) are primarily distributed as SHIFT-JIS encoded zips. For example, try downloading Yufu Sekka’s voicebank: http://sekkayufu.web.fc2.com/ . If I try to `unzip` the “full set” zip, it produces a folder called РсЙ╠ГЖГtТPУ╞Й╣ГtГЛГZГbГgБi111025Бj. But unar detects the encoding and properly extracts it as 雪歌ユフ単独音フルセット(111025). I’m sure there’s some flag you can pass to `unzip` to specify the encoding, but I like having `unar` handle it for me automatically.

Ah, that’s pretty cool. I’m not sure I know of that program. I do know a little Vocaloid though, but I only really listen to 稲葉曇 (Inabakumori).
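For the curious, here is a small shell sketch of what went wrong in the Utau example above. A zip made with Japanese tools typically stores file names as raw Shift-JIS bytes and doesn't record which encoding it used. The first characters of the garbled folder name are exactly what you get when those bytes are read as CP866, the DOS Cyrillic code page. The sketch assumes an `iconv` that knows the `SHIFT_JIS` and `CP866` encodings (glibc's does). It only looks at the first two characters of the name and doesn't touch `unzip` or `unar` at all.

```shell
set -eu

# Encode the real name the way the zip stores it (Shift-JIS bytes), then
# misread those bytes as CP866. This reproduces the start of the garbled name.
wrong=$(printf '雪歌' | iconv -f UTF-8 -t SHIFT_JIS | iconv -f CP866 -t UTF-8)

# Decode the same bytes as Shift-JIS, which is what unar figured out on its
# own, and the real name comes back.
right=$(printf '雪歌' | iconv -f UTF-8 -t SHIFT_JIS | iconv -f SHIFT_JIS -t UTF-8)

printf 'misread as CP866:  %s\n' "$wrong"
printf 'read as Shift-JIS: %s\n' "$right"
```

It should print РсЙ╠ for the misread version and 雪歌 for the correct one.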

I know Inabakumori! Their music is so cool! When I first listened to Rainy Boots and Lagtrain, it made me feel emotions I thought I had forgotten a long time ago… I wish my Japanese was good enough to understand the lyrics without looking them up ._. I’m also a huge fan of Kikuo. His music is just something completely unique, not to mention his insane tuning. He makes Miku sing in ways I didn’t think were possible lol

I get you, I want to learn more Japanese. I only understand a very small amount at this point. I don’t have any Miku songs that I have really wanted to listen to, but that could change. I might check out Kikuo then. Also I love the animations Inabakumori release with their songs too. They have some new stuff that’s really good if you haven’t checked it out yet.

Same thing with zip, just use `unzip <file>`, right?

.fitgirlrepack

.tar.gz

This guy tar balls

.zlib

ZSTD FTW

Damn, you guys are borrowing consonants from Polish names now?

Wut?

I think it’s a joke about how people from Poland have a lot of consonants in their names, particularly the letter Z. This looks strange to non-Poles, and so it became the subject of many jokes. ZSTD looks a little bit like a Polish name in the sense that it’s made up entirely of consonants, including a Z.

More examples of people making fun of Polish names:

Here is an alternative Piped link(s):

https://www.piped.video/watch?v=AfKZclMWS1U


I’m the weird one in the room. I’ve been using 7z for the last 10-15 years and now `.tar.zst`, after finding out that Zstandard achieves higher compression than 7-Zip, even with 7-Zip in “best” mode, LZMA version 1, huge dictionary sizes and whatnot.

`zstd --ultra -M99000 -22 files.tar -o files.tar.zst`

You can actually use Zstandard as your codec for 7z to get the benefits of better compression and a modern archive format! The downside is that it’s not a default codec, so when someone else tries to open it they may be confused by it not working.

That is an interesting implementation of security through obscurity…

How does one enable this on the standard 7Zip client?

On Windows, it’s easy! Unfortunately, on Linux, as far as I know, you currently have to use a non-standard client.

If you download and extract the tarball as two separate steps instead of piping `curl` directly into `tar xz` (for gzip) / `tar xj` (for bz2) / `tar xJ` (for xz), are you even a Linux user?

the problem is that if the connection gets interrupted, your progress is gone. if you download to a file first and it gets interrupted, you just resume the download later

I download and then tar. Curl pipes are scary

They really, really aren’t. Let’s take a look at this command together:

`curl -L [some url goes here] | tar -xz`

Sorry the formatting’s a bit messy, Lemmy’s not having a good day today

This command will start to extract the tar file while it is being downloaded, saving both time (since you don’t have to wait for the entire file to finish downloading before you start the extraction) and disk space (since you don’t have to store the .tar file on disk, even temporarily).
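Here is a local sketch of the same idea that you can try without a real URL. A second `tar` writes a gzipped archive to stdout and stands in for `curl -L`. The `tar -xz` on the other end of the pipe extracts the data as it arrives. This assumes GNU tar, which reads stdin when no `-f` is given. The directory and file names are made up for the example.

```shell
set -eu
work=$(mktemp -d)
trap 'rm -rf "$work"' EXIT

# Some files to pack; in real life they would live on the server.
mkdir -p "$work/src/project" "$work/out"
printf 'hello\n' > "$work/src/project/README"

# Left side: write a gzipped tarball to stdout (stand-in for `curl -L URL`).
# Right side: like the curl example, no -f, so tar reads the archive from
# stdin and extracts members as the bytes arrive.
tar -czf - -C "$work/src" project | tar -xz -C "$work/out"
```

No file ending in `.tar.gz` is ever created, because the archive only exists inside the pipe.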

Let’s break down what these scary-looking command line flags do. They aren’t so scary once you get used to them, though. We’re not scared of the command line. What are we, Windows users?

- `curl -L` – tells curl to follow 3XX redirects (which it does not do by default – if the URL you paste into curl redirects (GitHub release links famously do) and you don’t specify `-L`, it’ll just print the body of the redirect response, often a tiny HTML page that browsers never normally show)

- `tar -x` – eXtract the tar file (the other tar “command” flags, of which you must specify exactly one, include `-c` for Creating a tar file and `-t` for lisTing the files in it, which doubles as a quick sanity check since tar has to read through the whole archive to do it)

- `tar -z` – tells tar that its input is gzip compressed (by default tar assumes no compression at all, which is perfectly valid for a tar file) – you can also use `-j` for bzip2 and `-J` for xz

- `tar -f`, which you may be familiar with but which we don’t use here – `-f` tells tar which file you want it to read from (or write to, if you’re creating an archive). `tar -xf somefile.tar` will extract from somefile.tar. If you don’t specify `-f` at all, as in our command, tar will default to reading the archive from stdin (or writing it to stdout if you told it to create one). `tar -xzf somefile.tar.gz` is exactly equivalent to `cat somefile.tar.gz | tar -xz` (or `tar -xz < somefile.tar.gz` – why use cat to do something your shell has built in?)

- `tar -v`, which you may be familiar with but which we don’t use here – tells tar to print each filename as it extracts the file. If you want to do this, you can, but I’d recommend telling curl to shut up so it doesn’t mess up the terminal trying to show download progress also: `curl -L --silent [your URL] | tar -xvz` (or `-xzv`, tar doesn’t care about the order)

You may also have noticed that in some commands (like `tar xz` earlier in the thread) there’s no `-` in front of the arguments to tar. This is because tar is so old that it still accepts its traditional “old style” options, and will interpret its first argument as a set of flags regardless of whether there’s a dash in front of them or not. `tar -xz` and `tar xz` are exactly equivalent. tar does not care.
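To see these equivalences for yourself, here is a small sketch that assumes GNU tar and uses made-up file names. It lists a gzipped tarball with `-t` and then extracts it three ways: with `-f`, from stdin without `-f`, and with no dash at all. The three results should be identical.

```shell
set -eu
work=$(mktemp -d)
trap 'rm -rf "$work"' EXIT

# A tiny gzipped tarball to experiment on.
mkdir -p "$work/src/docs"
printf 'one\n' > "$work/src/docs/a.txt"
tar -czf "$work/docs.tar.gz" -C "$work/src" docs

# -t lists the members without extracting anything.
listing=$(tar -tzf "$work/docs.tar.gz")
printf '%s\n' "$listing"

# Three spellings of the same extraction, each into its own directory.
mkdir "$work/with_f" "$work/from_stdin" "$work/old_style"
(cd "$work/with_f"     && tar -xzf ../docs.tar.gz)   # -f names the archive
(cd "$work/from_stdin" && tar -xz < ../docs.tar.gz)  # no -f: read stdin
(cd "$work/old_style"  && tar xzf ../docs.tar.gz)    # no dash at all
```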

Thanks for the explanation, I might use more pipes now. Is it correct that tar will restore the files in the tarball into the current directory?
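Going by how GNU tar behaves: yes. Member paths like `site/index.html` are recreated relative to whatever directory you run tar in, and `-C DIR` tells it to use `DIR` instead. Here is a minimal sketch; the names are made up.

```shell
set -eu
work=$(mktemp -d)
trap 'rm -rf "$work"' EXIT

mkdir -p "$work/src/site" "$work/here" "$work/elsewhere"
printf '<h1>hi</h1>\n' > "$work/src/site/index.html"
tar -czf "$work/site.tar.gz" -C "$work/src" site

# Default: paths in the archive are recreated under the current directory.
(cd "$work/here" && tar -xzf ../site.tar.gz)

# With -C: extract under another (already existing) directory instead.
tar -xzf "$work/site.tar.gz" -C "$work/elsewhere"
```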

It’s not the flags that are scary, but rather what is inside the tar/zip.

I use .tar.gz in personal backups because it’s built in, and because it’s the command I could get custom subdirectory exclusion to work on.
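For anyone who wants the same kind of backup, here is a sketch of subdirectory exclusion using GNU tar's `--exclude`. The `home/.cache` layout is hypothetical. Pattern matching rules differ a bit between tar implementations, so check the listing before trusting a backup made this way.

```shell
set -eu
work=$(mktemp -d)
trap 'rm -rf "$work"' EXIT

# Hypothetical layout: notes we want to keep, a cache we don't.
mkdir -p "$work/home/notes" "$work/home/.cache/big"
printf 'keep\n' > "$work/home/notes/todo.txt"
printf 'skip\n' > "$work/home/.cache/big/blob"

# --exclude matches member names; excluding a directory skips everything
# under it. Options go before the paths being archived.
tar -czf "$work/backup.tar.gz" --exclude='home/.cache' -C "$work" home

# Check what actually went in.
listing=$(tar -tzf "$work/backup.tar.gz")
printf '%s\n' "$listing"
```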

Im a pirate, I am rar like, roawr, lol

most of the things i want to send around my network in archives are already compressed binary files, so i just tar everything.

You reinvented zip and didn’t even know it.

`tar` was nearly an adult when `zip` was born.

See my reply here: https://midwest.social/comment/10257041

The two aren’t really equivalent. They make different tradeoffs. The scheme of “compress individual files, then archive” from GP is what zip does. Tar does “archive first, then compress the whole thing”.

The link does not load for some reason, but tar itself does not compress anything. Compression can be (and usually is) applied afterwards, but that’s an additional step that is not part of Tape ARchive as such.
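The “archive first, then compress the whole thing” order can be done by hand as two separate steps, which is essentially what `-z` does for you in one go. Here is a sketch that assumes GNU tar and gzip and uses made-up files. The plain `.tar` still contains the file contents verbatim, and gunzipping the `.tar.gz` gives back the identical `.tar`.

```shell
set -eu
work=$(mktemp -d)
trap 'rm -rf "$work"' EXIT

mkdir -p "$work/src/photos"
printf 'first file\n'  > "$work/src/photos/1.jpg"
printf 'second file\n' > "$work/src/photos/2.jpg"

# Step 1, archive: tar bundles headers and file data but compresses nothing.
tar -cf "$work/photos.tar" -C "$work/src" photos

# Step 2, compress: gzip squeezes the whole archive as a single stream.
gzip -c "$work/photos.tar" > "$work/photos.tar.gz"
```

Zip compresses each member on its own. Here, the second step sees all the files as one stream, which can help when files resemble each other. The catch is that reaching a later file means decompressing everything before it.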

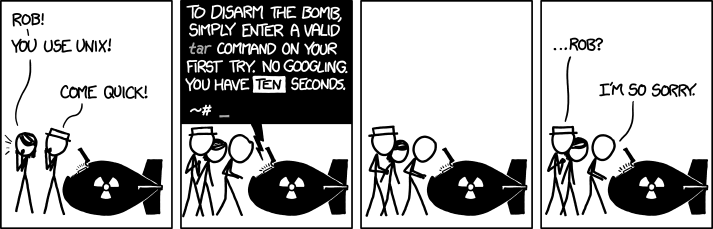

Oblig. XKCD:

`tar -h`

Edit: wtf… It’s actually `tar -?`. I’m so disappointed

boom

tar eXtractZheVeckingFile

You don’t need the v, it just means verbose and lists the extracted files.

You don’t need the z, it auto-detects the compression

That’s still kinda new. It didn’t always do that.

Per https://www.gnu.org/software/tar/, it’s been the case since 2004, so for about 19 and a half years…
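Here is a quick check of the auto-detection, assuming GNU tar and using made-up names: the archive is gzipped but gets extracted with a plain `-xf`. As far as I know, this only applies when tar reads the archive from a file. When the data arrives on stdin, as in the `curl ... | tar -xz` pipe earlier in the thread, GNU tar still wants the explicit `-z`.

```shell
set -eu
work=$(mktemp -d)
trap 'rm -rf "$work"' EXIT

mkdir -p "$work/src/data" "$work/out"
printf 'x\n' > "$work/src/data/f.txt"
tar -czf "$work/data.tar.gz" -C "$work/src" data

# No -z: reading from a file with -f, GNU tar works out by itself that the
# archive is gzip compressed.
tar -xf "$work/data.tar.gz" -C "$work/out"
```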

Telling someone that they are Old without saying they are old…

Something something don’t cite the old magics something something I was there when it was written…

Right, but you have no way of telling what version of tar that bomb is running

Yeah, I just tell our Linux newbies `tar xf`, as in “extract file”, and that seems to stick perfectly well.

You may not, but I need it. Data anxiety is real.

Me trying to decompress a .tar file

Joke’s on you, .tar isn’t compression

That’s not going to stop me from getting confused every time I try!

tar -uhhhmmmfuckfuckfuck

That’s yet another great joke that GNU ruined.

without looking, what’s the flag to push over ssh with compression

scp

tar -xzf

(read with German accent:) extract the files

Ixtrekt ze feils

German here and no shit - that’s how I’ve remembered it ever since the first time someone made that comment

Same. Also German btw 😄

Not German but I remember the comment but not the right letters so I would have killed us all.

That’s so good I wish I needed to memorize the command

man tar

you never said I can’t run a command before it.

The Fish shell shows me just the past command with tar

So I don’t need to remember strange flags

I use zsh and love the fish autocomplete, so I use this:

https://github.com/zsh-users/zsh-autosuggestions

Also have `fzf` for `ctrl + r` to fuzzy find previous commands.

I believe it comes with oh-my-zsh, just has to be enabled in plugins and itjustworks™

one of the cool things about tar is that it’s hard link aware
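Here is a small sketch of what “hard link aware” means in practice, assuming GNU tar and using made-up names. Two names for the same file go into the archive. One of them is stored as a link to the other rather than as a second copy of the data. Extraction then recreates them as a single file with two names.

```shell
set -eu
work=$(mktemp -d)
trap 'rm -rf "$work"' EXIT

mkdir -p "$work/src/linked" "$work/out"
printf 'shared data\n' > "$work/src/linked/original"
# A second name for the same inode.
ln "$work/src/linked/original" "$work/src/linked/alias"

tar -cf "$work/linked.tar" -C "$work/src" linked
# The verbose listing shows one of the names as "link to" the other.
tar -tvf "$work/linked.tar"

tar -xf "$work/linked.tar" -C "$work/out"
```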

.tar.xz

☠️

tar cvjf compressed-shit.tar.bz2 /path/to/uncompressed/shit/

Only way to fly.

I stopped doing that because I found it painfully slow. And it was quicker to gzip and upload than to bzip2 and upload.

Of course, my hardware wasn’t quite as good back then. I also learned to stop adding the ‘v’ flag because the bottleneck was actually stdout! (At least when extracting.)

for the last 14 years of my career, I was using stupidly overpowered Oracle Exadata systems exclusively, so “slow” meant 3 seconds instead of 1.

Now that I’m retired, I pretty much never need to compress anything.

I’m curious about the contents in your compressed shit.